|

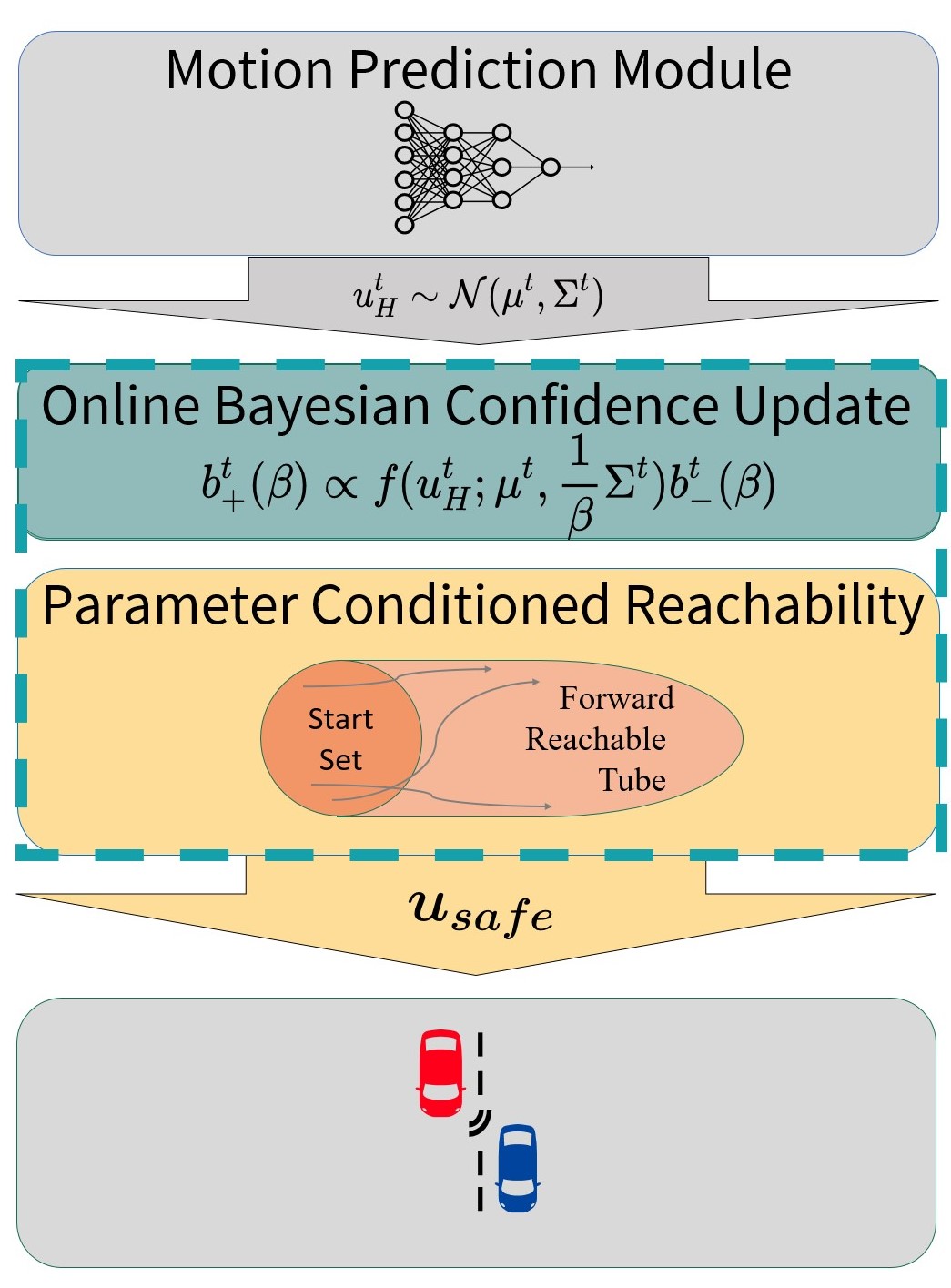

Robots such as autonomous vehicles and assistive manipulators are increasingly operating in dynamic environments and close physical proximity to people. In such scenarios, the robot can leverage a human motion predictor to predict their future states and plan safe and efficient trajectories. However, no model is ever perfect -- when the observed human behavior deviates from the model predictions, the robot might plan unsafe maneuvers. Recent works have explored maintaining a confidence parameter in the human model to overcome this challenge, wherein the predicted human actions are tempered online based on the likelihood of the observed human action under the prediction model. This has opened up a new research challenge, i.e., how to compute the future human states online as the confidence parameter changes? In this work, we propose a Hamilton-Jacobi (HJ) reachability-based approach to overcome this challenge. Treating the confidence parameter as a virtual state in the system, we compute a parameter-conditioned forward reachable tube (FRT) that provides the future human states as a function of the confidence parameter. Online, as the confidence parameter changes, we can simply query the corresponding FRT, and use it to update the robot plan. Computing parameter-conditioned FRT corresponds to an (offline) high-dimensional reachability problem, which we solve by leveraging recent advances in data-driven reachability analysis. Overall, our framework enables online maintenance and updates of safety assurances in human-robot interaction scenarios, even when the human prediction model is incorrect. We demonstrate our approach in several safety-critical autonomous driving scenarios, involving a state-of-the-art deep learning-based prediction model.

|