Kensuke Nakamura

I am a Ph.D. student at the Carnegie Mellon University Robotics Institute, where I am advised by Prof. Andrea Bajcsy. My research leverages the synergy between optimal control and generative models to enable robots to operate safely in unstructured and uncertain environments. I develop theory and algorithms grounded in systems such as autonomous vehicles that use learned trajectory forecasters during planning and robotic manipulators that use world models to understand nuanced safety constraints. I was named a 2025 HRI Pioneer, and I am fortunate to be supported by the NSF Graduate Research Fellowship.

Previously, I graduated from Princeton University, where I was advised by Jaime Fernández Fisac and Naomi Ehrich Leonard. I’ve also had the pleasure of collaborating with Somil Bansal.

E-mail / Google Scholar / GitHub / Twitter

news

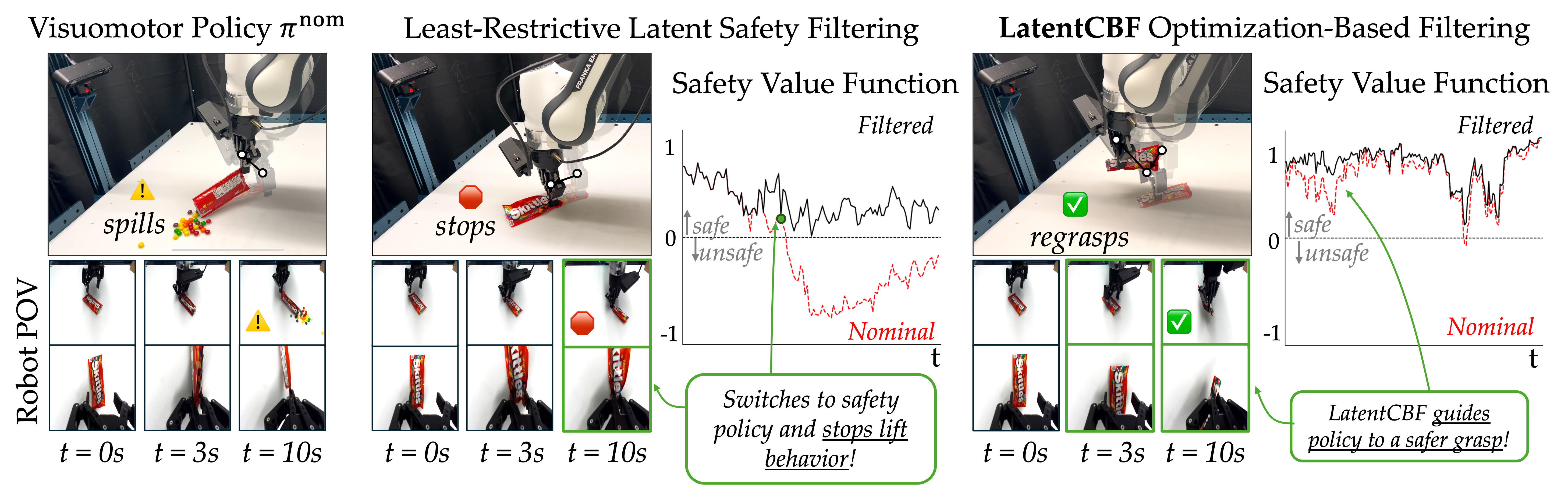

| Nov 23, 2025 | Excited to share that we extended the paradigm of latent safety filtering to optimization-based filters in the style of Control Barrier Functions. While theory tells us extending HJ value functions to act as CBFs should be straightforward, we identified and remedied two key details to make it work in practice. Details in the project website here |

|---|---|

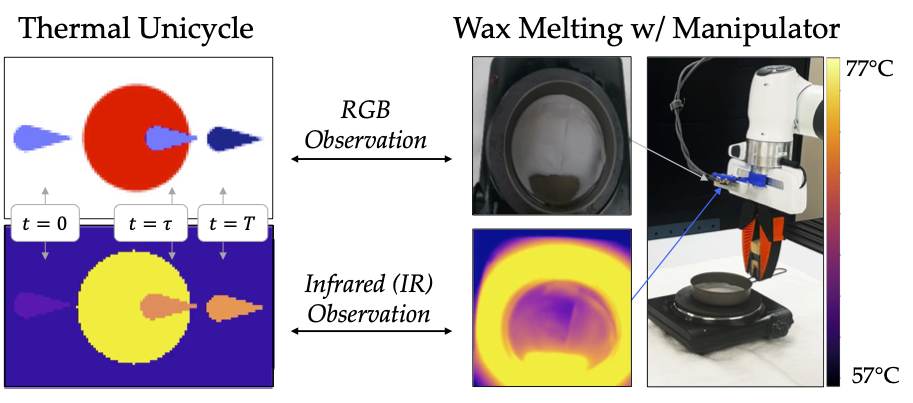

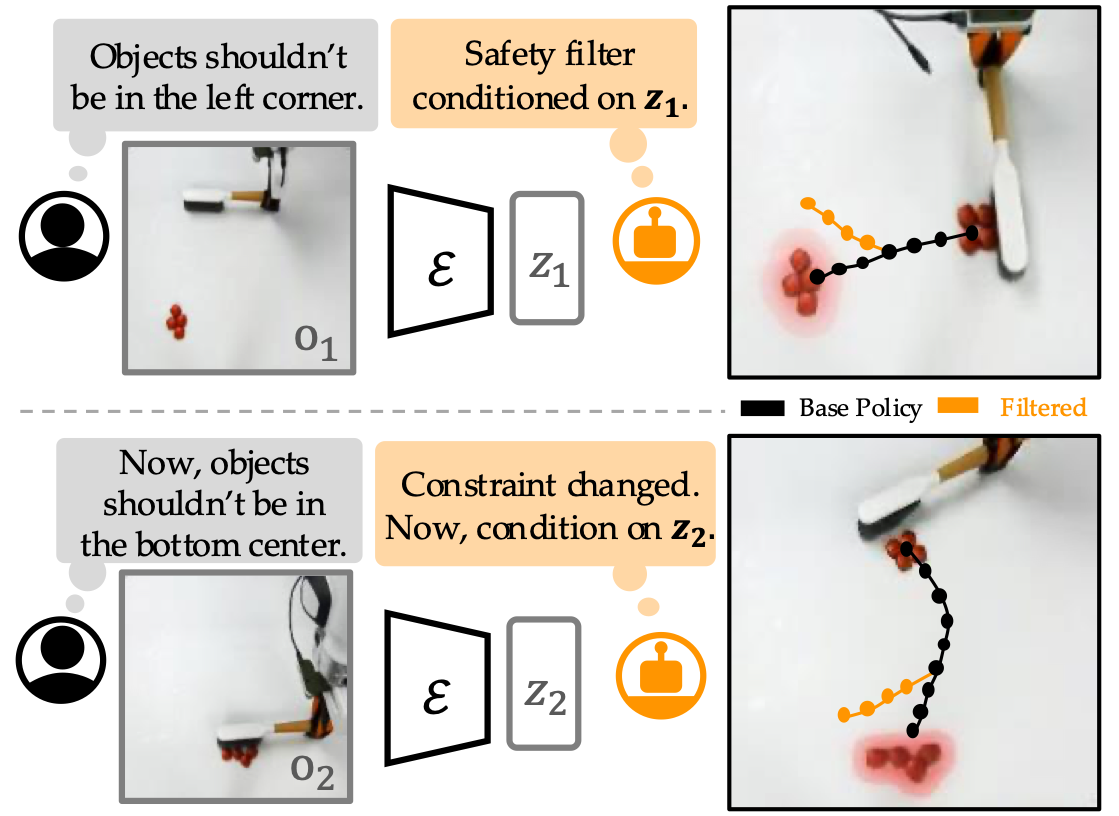

| Oct 01, 2025 | Two new papers extending latent safety filters! In Anysafe we explore how to parameterized latent safety filters in order to flexibly specify constraints at deployment time. In our second work we study temperature-based constraints to investigate how partial observability can degrade the ability of latent safety filters to prevent safety violations. We quantify partial observability in the latent space of self-supervised world models using mutual information and provide a multimodal training strategy for effective filtering even under partial observability! |

| May 01, 2025 | Our new paper strengthens latent safety filters by allowing them to account for out-of-distribution failures by treating regions where a world model has high uncertainty as a safety violation. This work was lead by Junwon Seo and the project website is here. |

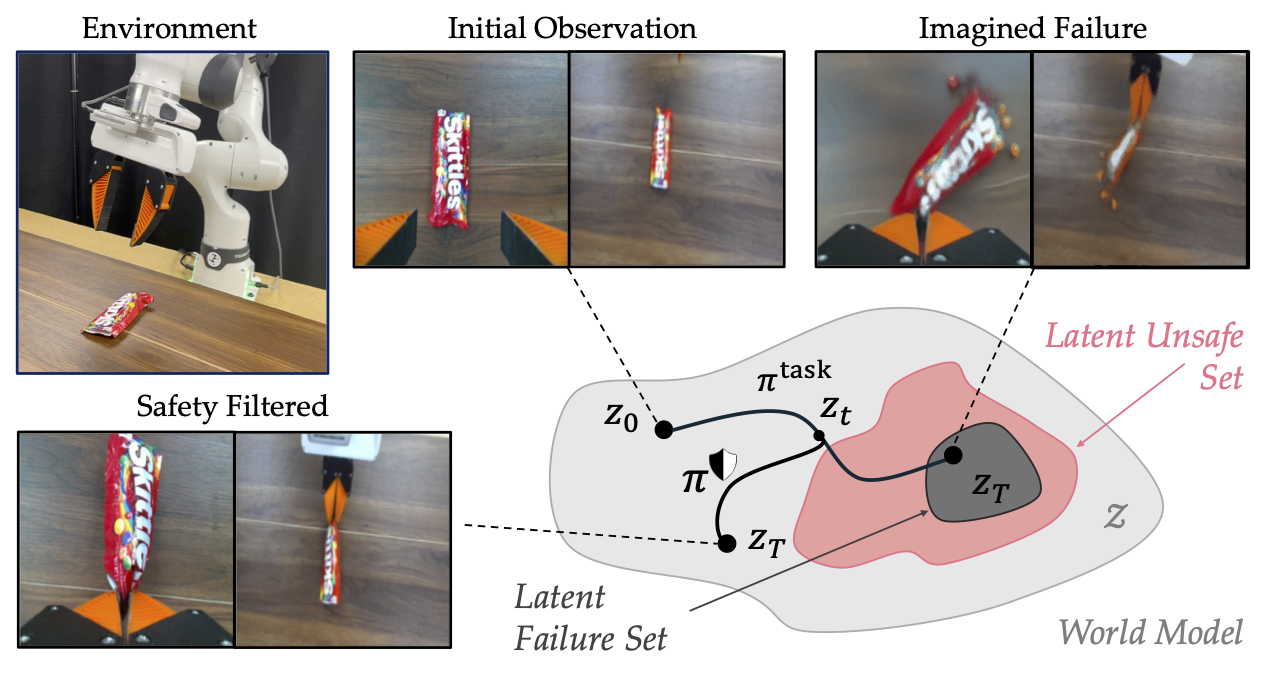

| Apr 23, 2025 | Our paper on generalizing safety analysis for constraints beyond collision-avoidance was just accepted to RSS 2025! |

| Apr 07, 2025 | I gave a talk to the SAIDS lab at USC! Later this month I’ll be giving a talk to the HERALD lab at TU Delft! |

selected publications

-

How to Train Your Latent Control Barrier Function: Smooth Safety Filtering Under Hard-to-Model ConstraintsarXiv preprint arXiv:2511.18606, 2025

How to Train Your Latent Control Barrier Function: Smooth Safety Filtering Under Hard-to-Model ConstraintsarXiv preprint arXiv:2511.18606, 2025 -

What You Don’t Know Can Hurt You: How Well do Latent Safety Filters Understand Partially Observable Safety Constraints?arXiv preprint arXiv:2510.06492, 2025

What You Don’t Know Can Hurt You: How Well do Latent Safety Filters Understand Partially Observable Safety Constraints?arXiv preprint arXiv:2510.06492, 2025 -

AnySafe: Adapting Latent Safety Filters at Runtime via Safety Constraint Parameterization in the Latent Space2025

AnySafe: Adapting Latent Safety Filters at Runtime via Safety Constraint Parameterization in the Latent Space2025 -

- RSS

Generalizing Safety Beyond Collision-Avoidance via Latent-Space Reachability AnalysisIn Robotics: Science and Systems, 2025

Generalizing Safety Beyond Collision-Avoidance via Latent-Space Reachability AnalysisIn Robotics: Science and Systems, 2025 - CoRL

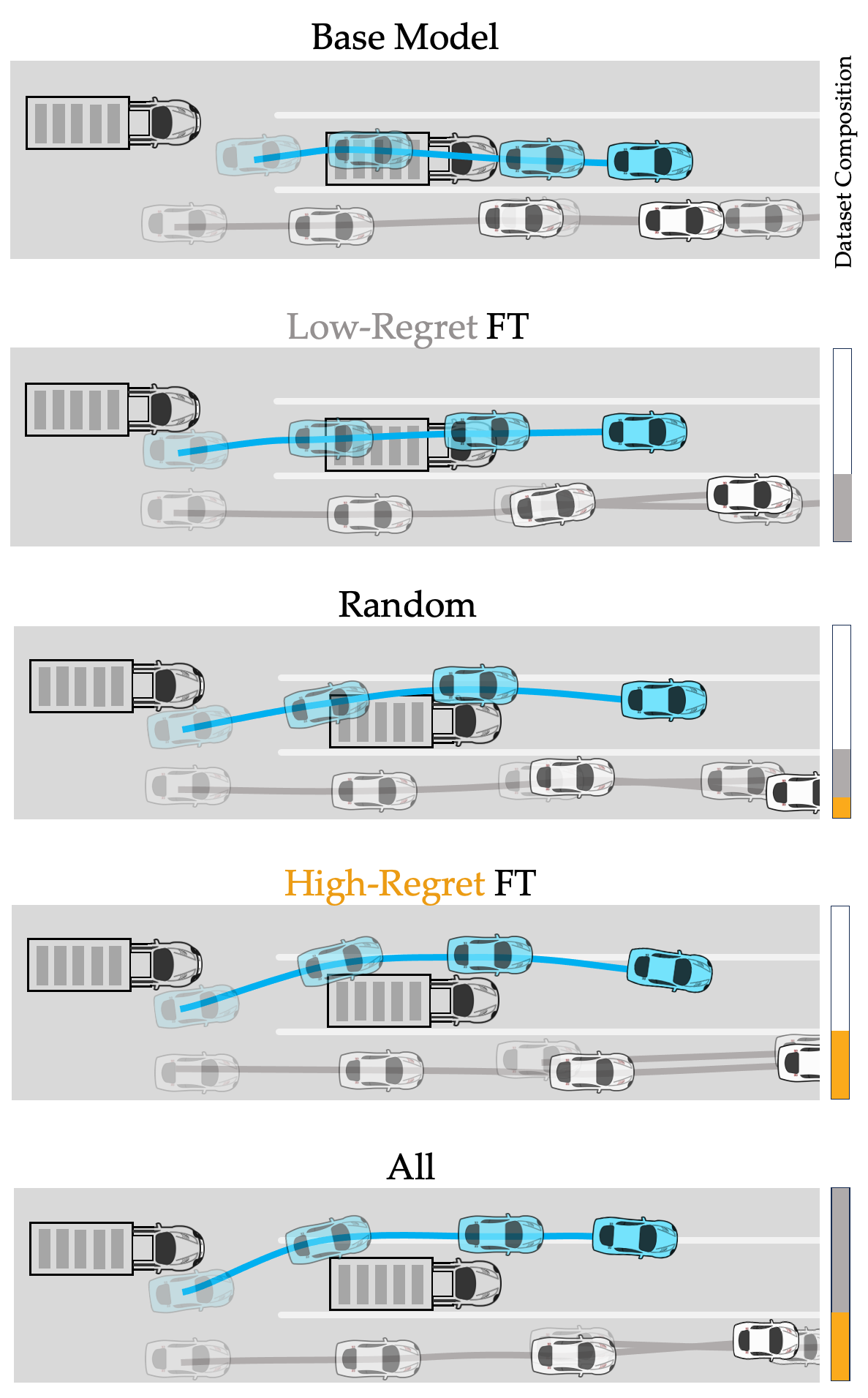

Not All Errors Are Made Equal: A Regret Metric for Detecting System-level Trajectory Prediction FailuresIn 8th Annual Conference on Robot Learning, 2024

Not All Errors Are Made Equal: A Regret Metric for Detecting System-level Trajectory Prediction FailuresIn 8th Annual Conference on Robot Learning, 2024 - CoRL

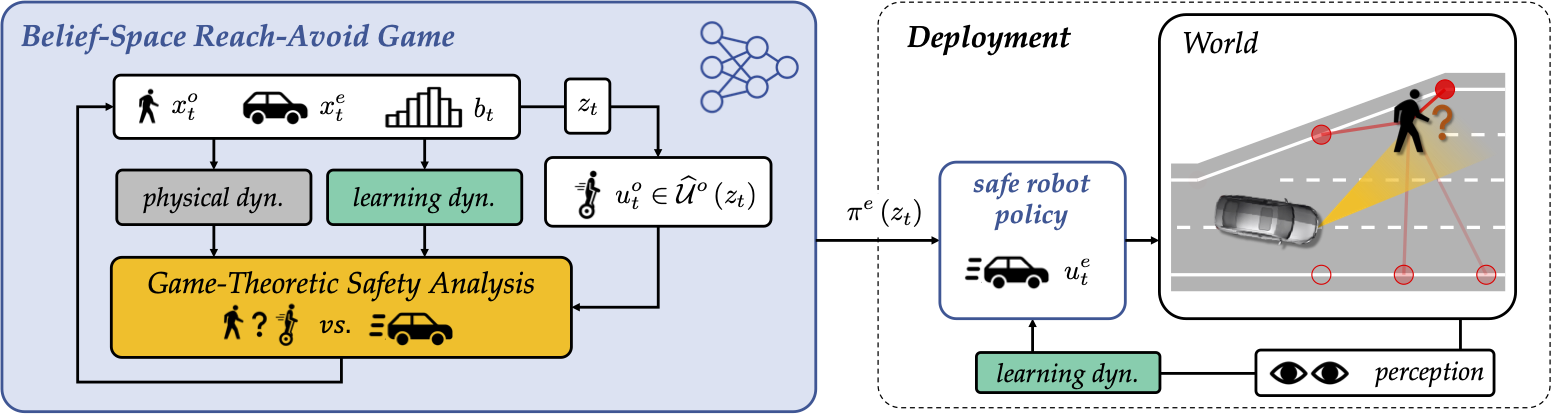

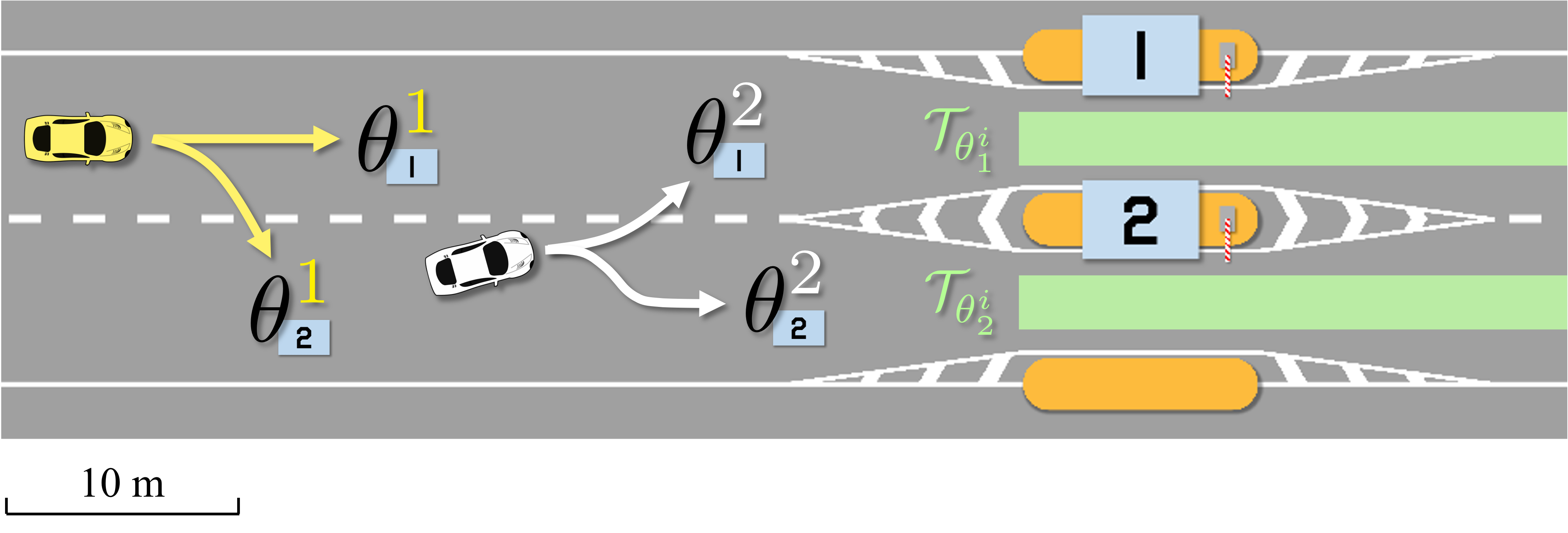

Deception Game: Closing the Safety-Learning Loop in Interactive Robot AutonomyIn 7th Annual Conference on Robot Learning, 2023

Deception Game: Closing the Safety-Learning Loop in Interactive Robot AutonomyIn 7th Annual Conference on Robot Learning, 2023 - CDC

Emergent Coordination through Game-Induced Nonlinear Opinion DynamicsIn 2023 62nd IEEE Conference on Decision and Control (CDC), 2023

Emergent Coordination through Game-Induced Nonlinear Opinion DynamicsIn 2023 62nd IEEE Conference on Decision and Control (CDC), 2023 - ICRA

Online Update of Safety Assurances Using Confidence-Based PredictionsIn 2023 International Conference on Robotics and Automation (ICRA), 2023

Online Update of Safety Assurances Using Confidence-Based PredictionsIn 2023 International Conference on Robotics and Automation (ICRA), 2023